[HCI-Under] Assignment 2

I.

Google Photos Interface Review

What is Google Photos

Google Photos is a photo organization and sharing application developed by Google. First announced in 2015, it is widely used among Android users because it provides free, unlimited storage for photos and videos within a certain size and resolution limit.

Interface Review

Google Photos is designed with the demographics of its users in mind. Its interface is meant for people of diverse ages, genders, and skill levels, and it has been simplified so that even an inexperienced user can easily navigate and use the software. Upon opening, there is a search bar at the top, which also gives access to other options and menus, and four tabs at the bottom that highlight which feature the user is currently using. Almost all icons are accompanied by their text equivalents, so users do not have to guess their meaning. The interface also offers a sense of consistency and universality. The search bar is always present at the top, and its three-line menu icon turns into a back button whenever one is needed. The back button always appears at the top left of the screen, even when different features are in use. The three-dot option button likewise always appears at the top right of the screen so the user can perform other tasks. This consistency reduces the user’s memory load while making navigation more convenient.

figure 1-1

figure 1-2

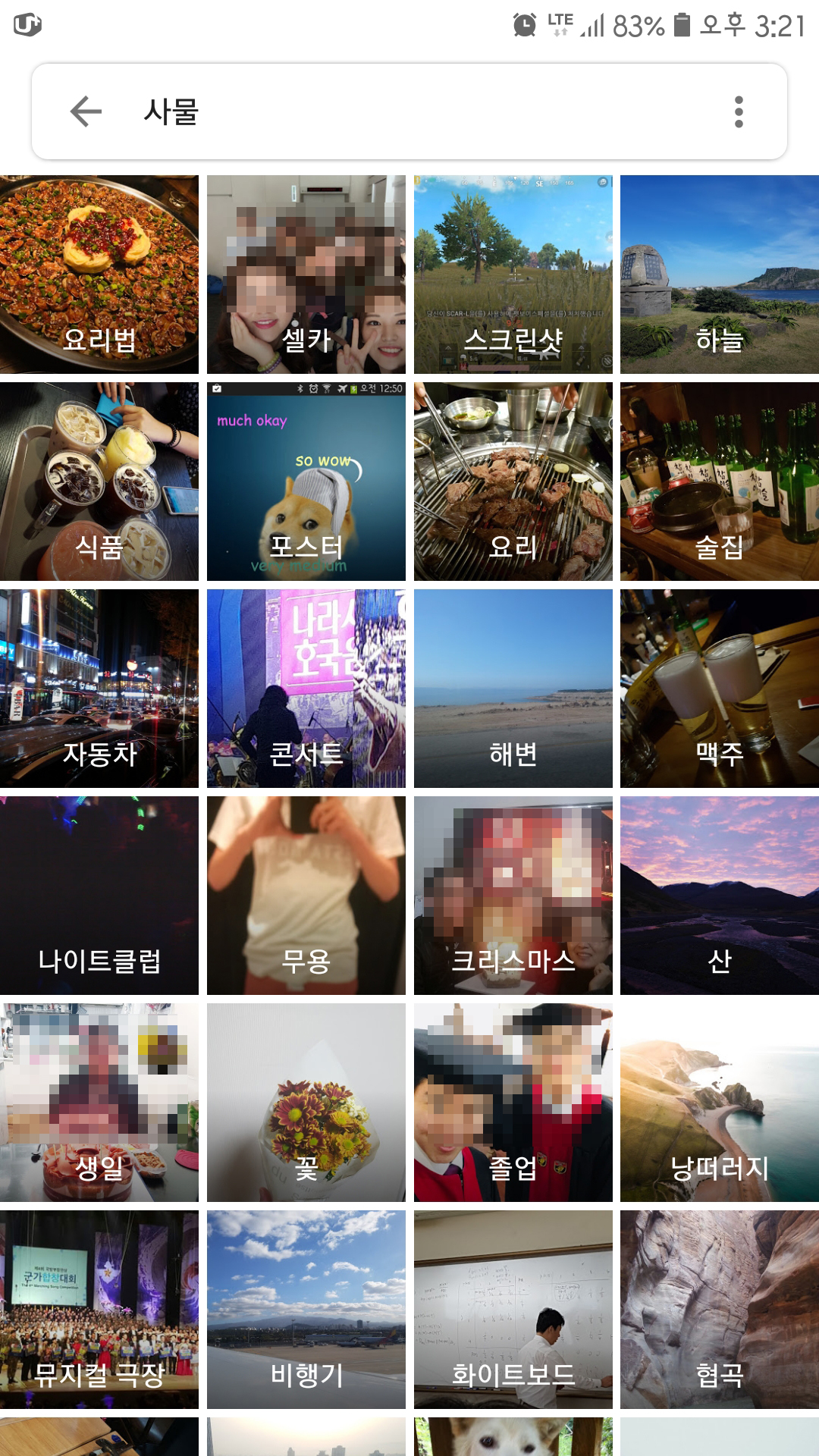

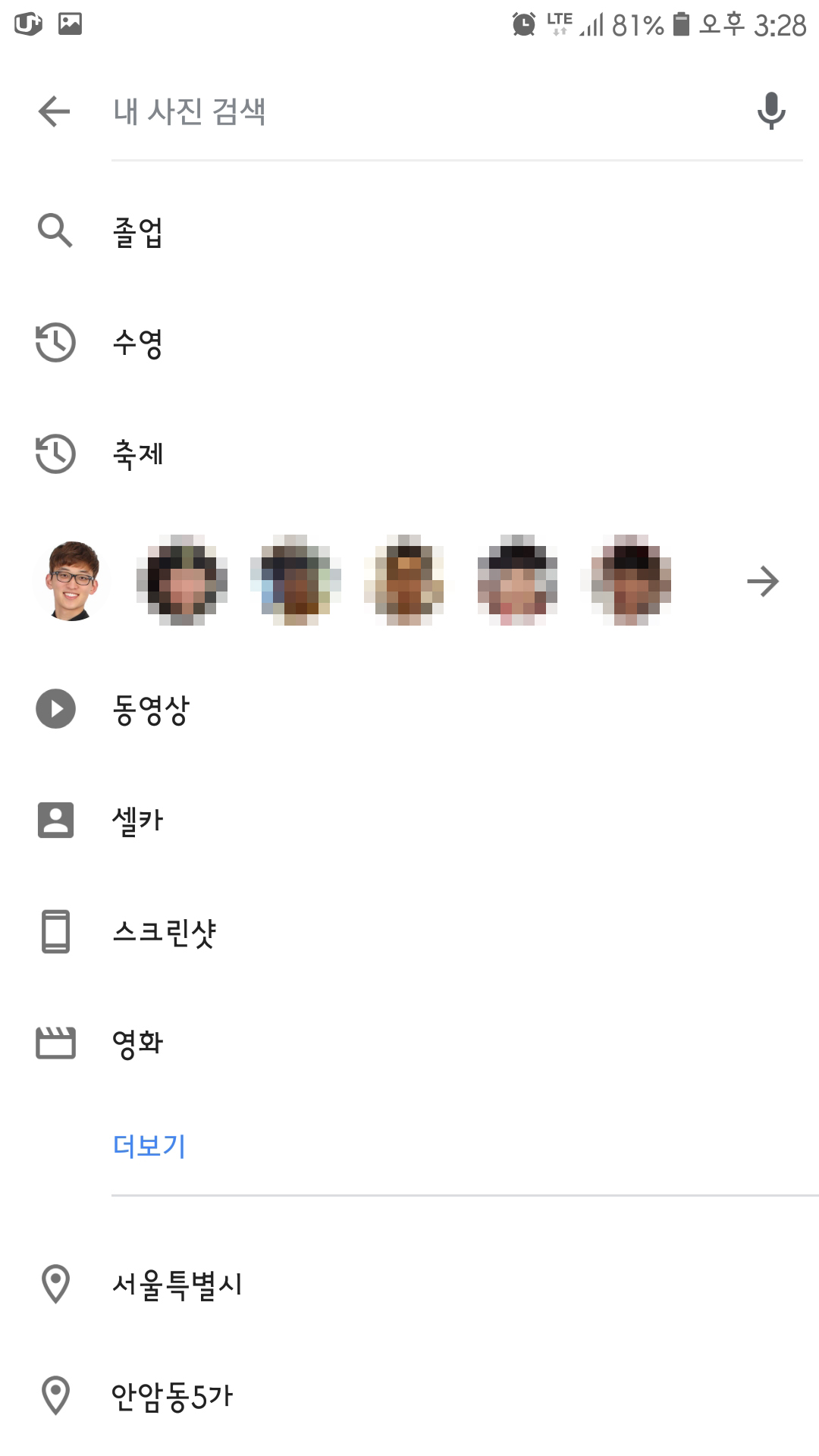

As photo organizing software, Google Photos does its job well. It uses its own AI to organize the user’s photos. Upon opening, Google Photos shows the user’s photos and videos in chronological order. In the Album tab, it auto-generates several albums, based on the time and place the photos or videos were taken, that the user may like to revisit at a later date (figure 1-1). Furthermore, Google Photos recognizes faces, objects, and places within photos and provides an option to search photos by face, location, or object. Upon clicking on a face, location, or object, the related photos are listed in descending chronological order. This makes organizing photos convenient and less tedious for the user.

Faces

Location

Objects

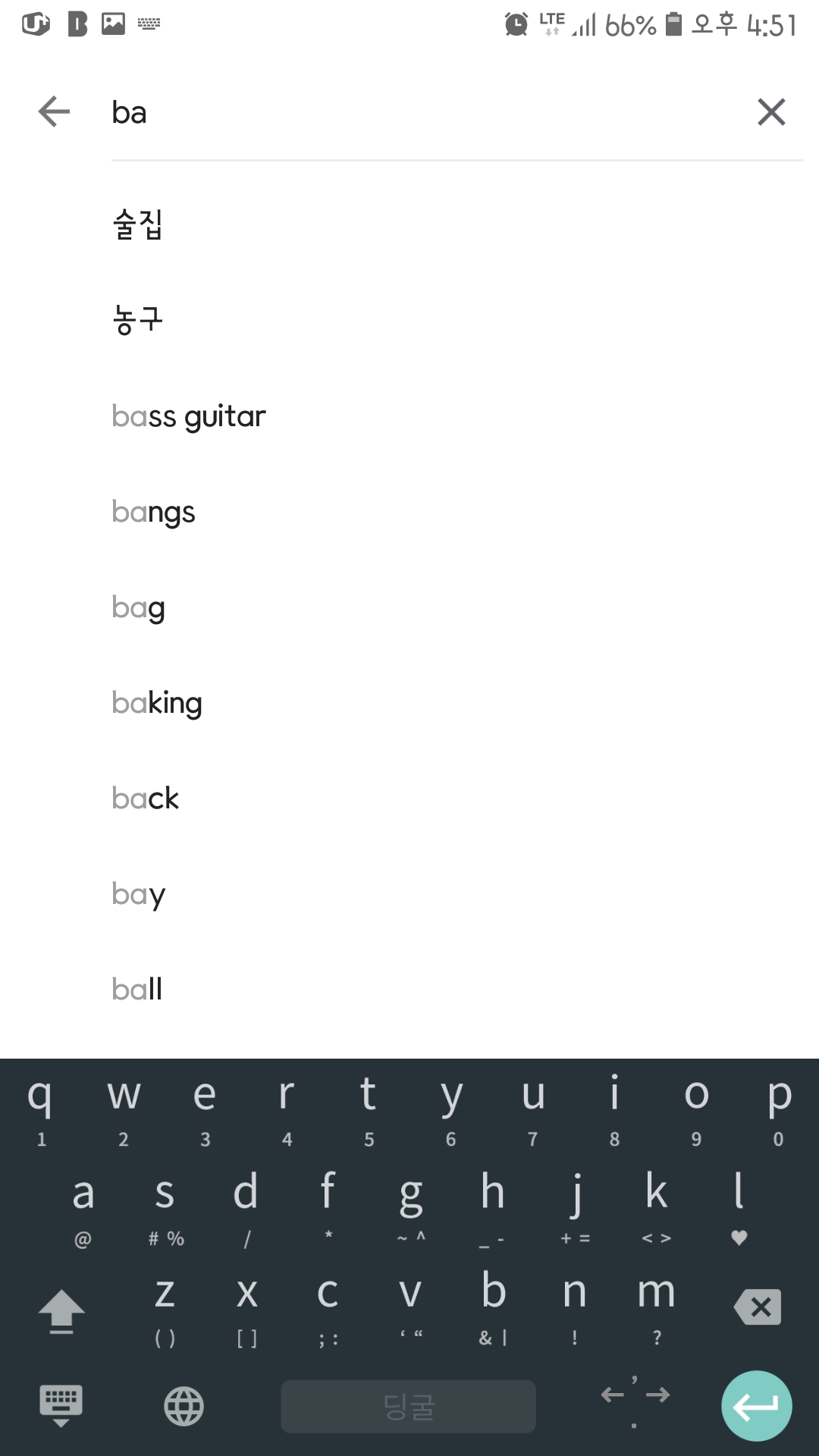

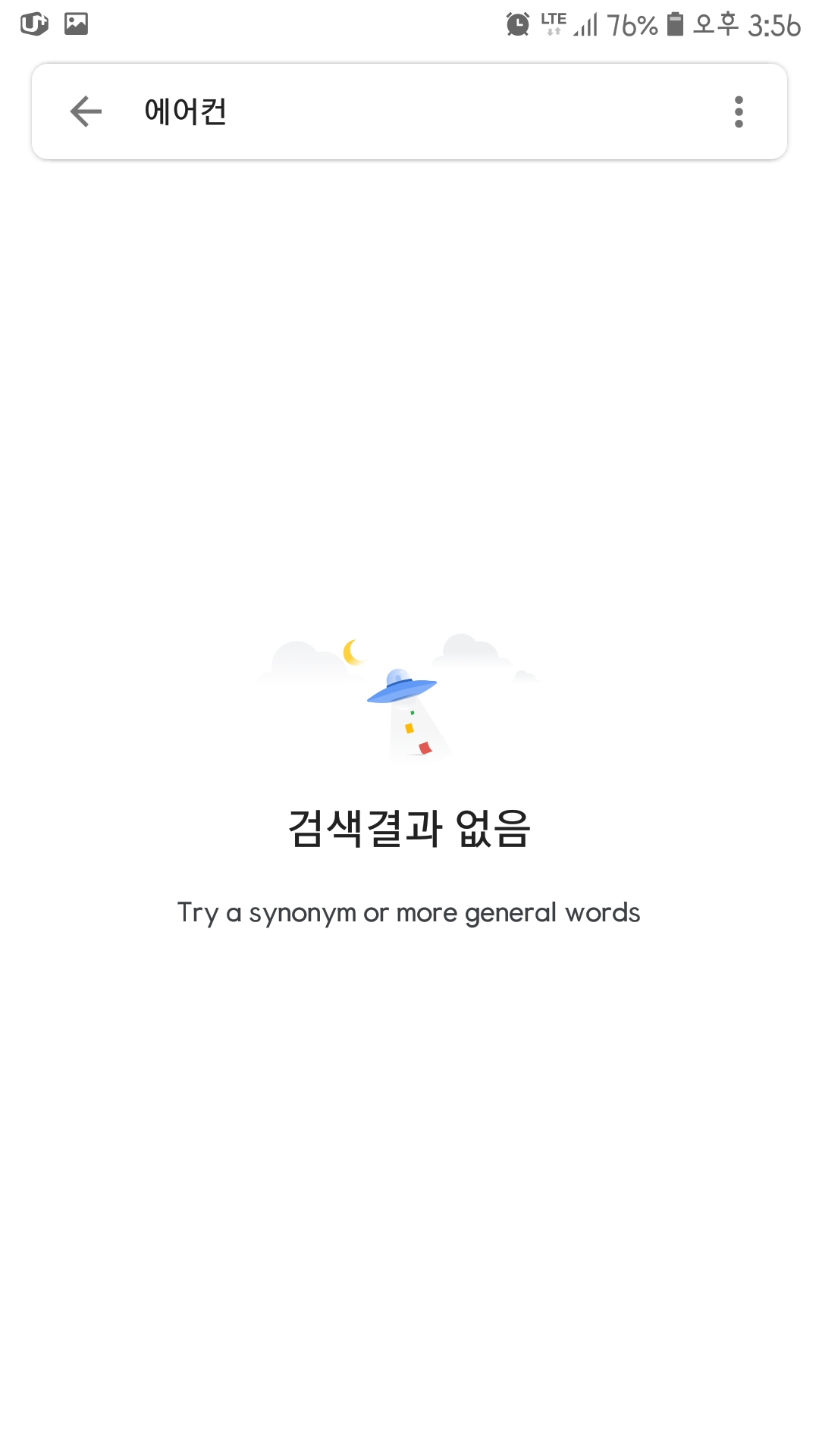

Google Photos also provides feedback and prevents possible errors. When the user starts a search, Google Photos recommends search terms, which reduces input time as well as the chance of typos (figure 1-4). Furthermore, if a search term returns no results, Google Photos provides a closure of sorts, alerting the user that there are no results and informing them of other ways to perform a search (figure 1-5).

figure 1-3

figure 1-4

figure 1-5

II.

Modeling Software Usage as Decision Trees

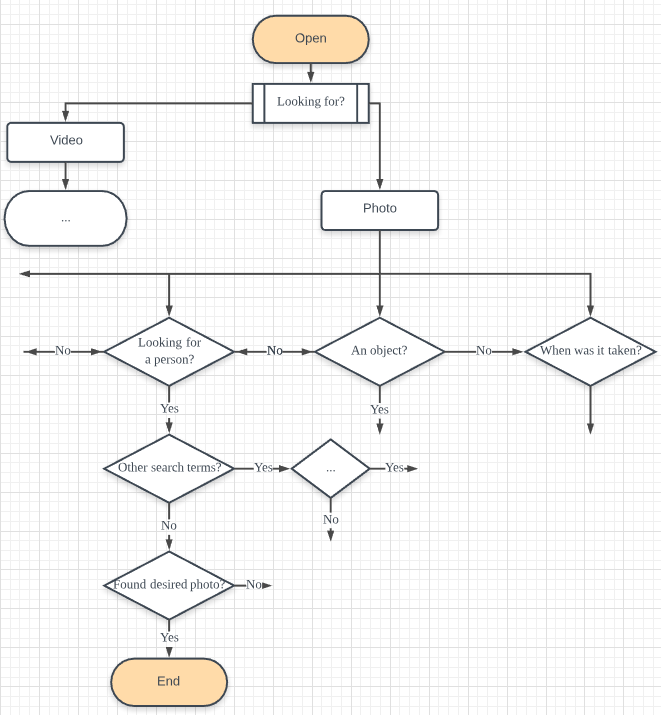

A decision tree is a tree-like graph that models a process in terms of inputs and their possible outcomes. It is usually used to model an algorithm that works toward a goal. At first glance, a decision tree may seem sufficient to model picture organizing software; however, constructing one makes it apparent that it is not the best option. A single photo may carry more than one label by which it could be categorized and organized. For example, a photo may contain a person with a dog next to a river. Even if each node of the decision tree allows a multiple-choice answer rather than a binary one, the contents of a photo can be complex enough to produce a very large and complicated tree.
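To make the size argument concrete, here is a minimal sketch. It assumes each classifier is a yes/no question (person? dog? river?) and that the tree asks every question once along each path. The label names and the helpers `leaf_count` and `enumerate_leaves` are illustrative only and do not describe how Google Photos works internally.

```python
from itertools import product

# Hypothetical yes/no classifiers; real photo software would have far more.
LABELS = ["person", "dog", "river"]


def leaf_count(num_labels: int) -> int:
    """Leaves in a full binary decision tree that tests every label once."""
    return 2 ** num_labels


def enumerate_leaves(labels):
    """List every leaf as the set of labels answered 'yes' on the path to it."""
    return [
        {label for label, present in zip(labels, answers) if present}
        for answers in product([False, True], repeat=len(labels))
    ]


leaves = enumerate_leaves(LABELS)
print(len(leaves))      # 8 leaves for 3 labels
print(leaf_count(20))   # 1048576 leaves for 20 labels
```

The number of leaves doubles with every added label, whereas storing the labels of a photo as a plain set grows only with the number of labels that photo actually has.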

A proper model of software use (such as picture organization) should take into account that a user’s choice is neither binary nor multiple choice; it is complex and, to an extent, unpredictable. Instead of a sequential workflow model, a state machine workflow may be a better way to model software. Depending on the user’s input in each state, the workflow may move forward, move backward, or loop back to the same state to produce the desired action.
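A state machine workflow of this kind might be sketched as a transition table. The states (`browsing`, `searching`, `results`, `viewing`, `sharing`) and the input names below are assumptions made up for illustration; they are not taken from Google Photos itself.

```python
# Hypothetical states and user inputs for a photo app.
# Each entry maps (current state, user input) to the next state.
TRANSITIONS = {
    ("browsing", "tap_search"): "searching",  # forward
    ("searching", "type"): "searching",       # loops back to itself
    ("searching", "submit"): "results",       # forward
    ("searching", "back"): "browsing",        # backward
    ("results", "tap_photo"): "viewing",
    ("results", "back"): "searching",
    ("viewing", "back"): "results",
    ("viewing", "share"): "sharing",
    ("sharing", "done"): "viewing",
}


def step(state, user_input):
    """Return the next state; inputs with no transition leave the state unchanged."""
    return TRANSITIONS.get((state, user_input), state)


def run(inputs, start="browsing"):
    """Feed a sequence of user inputs through the machine and return the final state."""
    state = start
    for user_input in inputs:
        state = step(state, user_input)
    return state


print(run(["tap_search", "type", "type", "submit", "tap_photo", "back"]))  # results
```

Unlike a tree, the table allows the same state to be reached from several directions, so going back or repeating an input does not require duplicating branches.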

Example of Software Usage Modeled as a Decision Tree

III.

Applying GOMS to a Task

Users of picture organizing software such as Google Photos would most likely use it to search for photos or videos and then save them to their device or share them. The task is therefore: search for a picture, then save or share it. For this simple task, applying the GOMS methodology gives the following steps:

1. Move eyes to location on screen | 1.35sec

2. Select search bar | 0.1sec

3. Type in search term | 0.22sec * n (where n is number of characters)

4. Search for photo (include scroll time) | 1.35sec + (s*0.9sec + l*0.16sec) | s: number of scrolls; l: length of scroll in cm

5. Select photo | 0.1sec

6. Traverse to option button and click | 1.35sec + 0.1sec

7. Search for “Save” or “Share” and click | 1.35sec + 0.1sec

8. Select place to save, or app to share | 1.35sec + 0.1sec

9. Repeat if needed | *N
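The steps above can be added up with a short script. This is only a sketch under stated assumptions: the search term is taken to be 10 characters long (the text does not state a length; 10 is the value that reproduces the reported total), the 0.16 sec scroll factor is read as seconds per centimetre, and the repeat count in step 9 is assumed to multiply the whole sequence. The function and constant names are hypothetical.

```python
# Operator times in seconds, taken from the numbered steps.
LOOK = 1.35           # move eyes to / locate a target on screen
TAP = 0.10            # select or click a target
KEY = 0.22            # type one character
SCROLL = 0.90         # one scroll gesture
SCROLL_PER_CM = 0.16  # assumed to be seconds per cm of scroll length


def goms_estimate(chars, scrolls, scroll_cm, repeats=1):
    """Estimated task time in seconds for the search-and-save/share task."""
    steps = [
        LOOK,                                                  # 1. move eyes
        TAP,                                                   # 2. select search bar
        KEY * chars,                                           # 3. type search term
        LOOK + scrolls * SCROLL + scroll_cm * SCROLL_PER_CM,   # 4. search for photo
        TAP,                                                   # 5. select photo
        LOOK + TAP,                                            # 6. option button
        LOOK + TAP,                                            # 7. "Save" or "Share"
        LOOK + TAP,                                            # 8. destination or app
    ]
    return repeats * sum(steps)                                # 9. repeat if needed


# chars=10 is an assumption, not a figure given in the text.
total = goms_estimate(chars=10, scrolls=2, scroll_cm=3)
print(round(total, 2))  # 11.73
```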

The total estimated time for the task comes to approximately 11.73 seconds, assuming 2 scrolls, a scroll length of 3 cm, no repetition of the task, and no real-world distractions. When I executed the task myself, it took about 14.17 seconds. The additional time came from switching between Korean and English keyboard input and from general lag on my phone. All things considered, the GOMS prediction was reasonably close to the actual execution time, falling about 2.4 seconds (roughly 17%) short of it.

IV.

The System Architecture of Galaxy Note 9 and the S Pen

The Galaxy Note 9’s display generates a magnetic field that lets it detect the pen’s movement using EMR (Electro-Magnetic Resonance). When the S Pen comes close to the screen, a small amount of energy is induced in its resonant circuit. The S Pen also has a button on its side which, paired with the S Pen’s Bluetooth module, acts as a remote control. In addition, the S Pen has sensors that help pick up its pressure, position, angle, and speed.

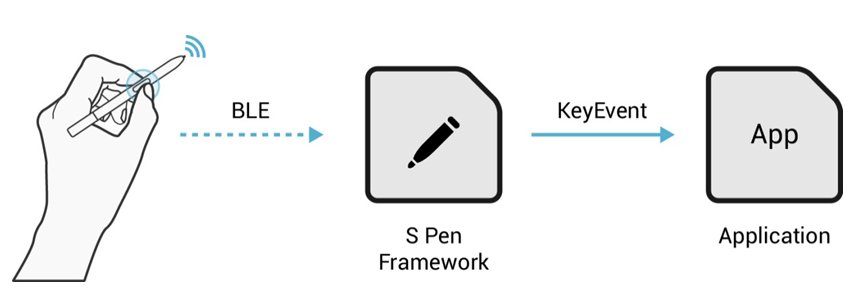

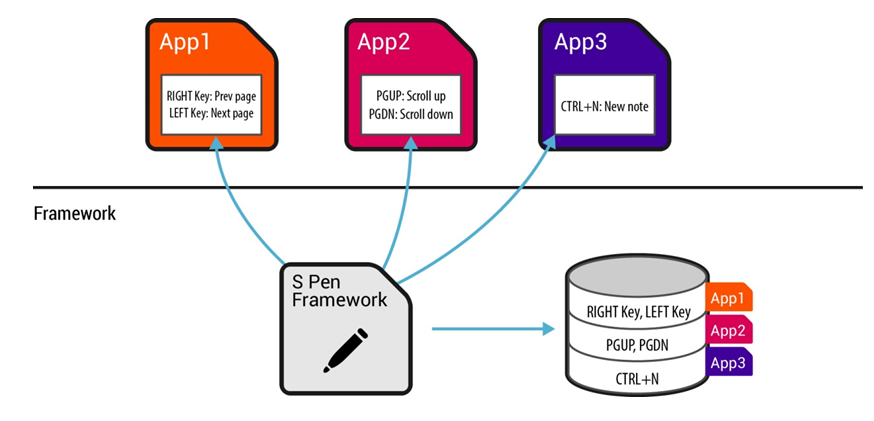

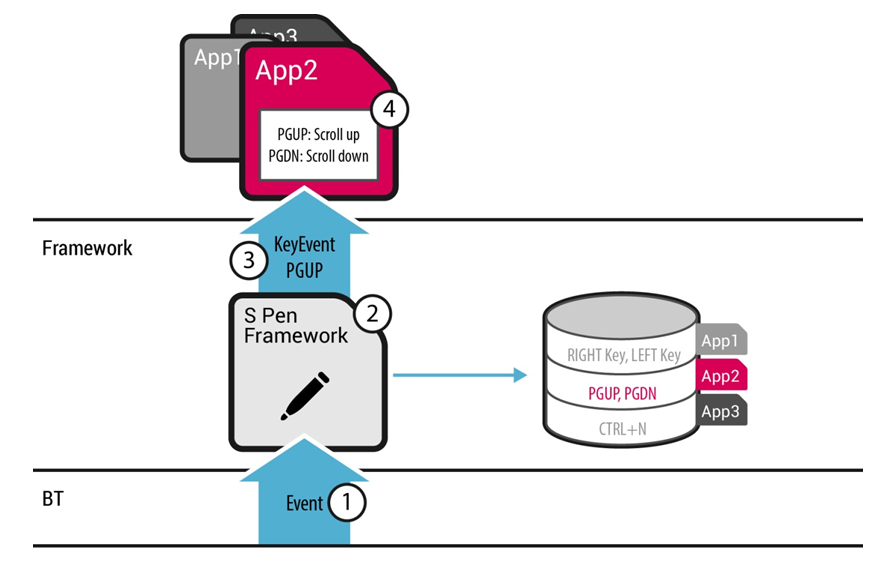

The S Pen acts as a remote control for apps on the Note 9. According to Samsung Developer’s Support guide, when the button on the S Pen is pressed, the event is sent to the S Pen Framework, which converts it into a KeyEvent and passes it on to the app to perform a certain task. This allows apps to handle S Pen events without any additional interfaces. Different tasks can be assigned in different apps, which leaves the user fully in control of the S Pen; the S Pen Framework checks which tasks an app has defined and delivers the corresponding KeyEvent.
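The button-press flow described above can be modelled in a few lines. This is a toy simulation written in Python, not Samsung or Android code: the class `SPenFrameworkSketch`, the `KeyEvent` stand-in, the key codes, and the camera bindings are all hypothetical names chosen to illustrate the idea that the framework looks up what the foreground app has declared and hands it an ordinary key event.

```python
from dataclasses import dataclass


@dataclass
class KeyEvent:
    """Stand-in for the key event an app receives; fields are illustrative."""
    key_code: str
    action: str  # e.g. "single_click" or "double_click"


class SPenFrameworkSketch:
    """Toy model: turns raw button presses into KeyEvents for the foreground app."""

    def __init__(self):
        self._bindings = {}  # app name -> {button action: key code}
        self._handlers = {}  # app name -> callable that takes a KeyEvent

    def register(self, app, bindings, handler):
        """An app declares which key code each button action should produce."""
        self._bindings[app] = bindings
        self._handlers[app] = handler

    def on_button(self, foreground_app, action):
        """Convert a button press into a KeyEvent and pass it to the app."""
        bindings = self._bindings.get(foreground_app, {})
        if action not in bindings:
            return None  # the app declared no task for this press
        event = KeyEvent(key_code=bindings[action], action=action)
        return self._handlers[foreground_app](event)


def camera_handler(event):
    # The app only sees a key event, as it would from any other key source.
    return "take picture" if event.key_code == "KEY_SHUTTER" else "switch camera"


framework = SPenFrameworkSketch()
framework.register(
    "camera",
    {"single_click": "KEY_SHUTTER", "double_click": "KEY_SWITCH"},
    camera_handler,
)
print(framework.on_button("camera", "single_click"))  # take picture
```

Because the handler receives nothing but a key event, the same app code would work whether the event came from the pen or from another key source, which is the point of not needing additional interfaces.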

Use Case Scenario

S Pen Remote Events Collection

S Pen Remote Events Transmission